Siamese Network

Kind of One Shot Classification Network. Require that you have just one training example of each class you want to predict on. The model is still trained on several instances, but they only have to be in the similar domain as your training example.

A nice example would be facial recognition. You would train a One Shot classification model on a dataset that contains various angles , lighting , etc. of a few people. Then if you want to recognize if a person X is in an image, you take one single photo of that person, and then ask the model if that person is in the that image

Siamese Network

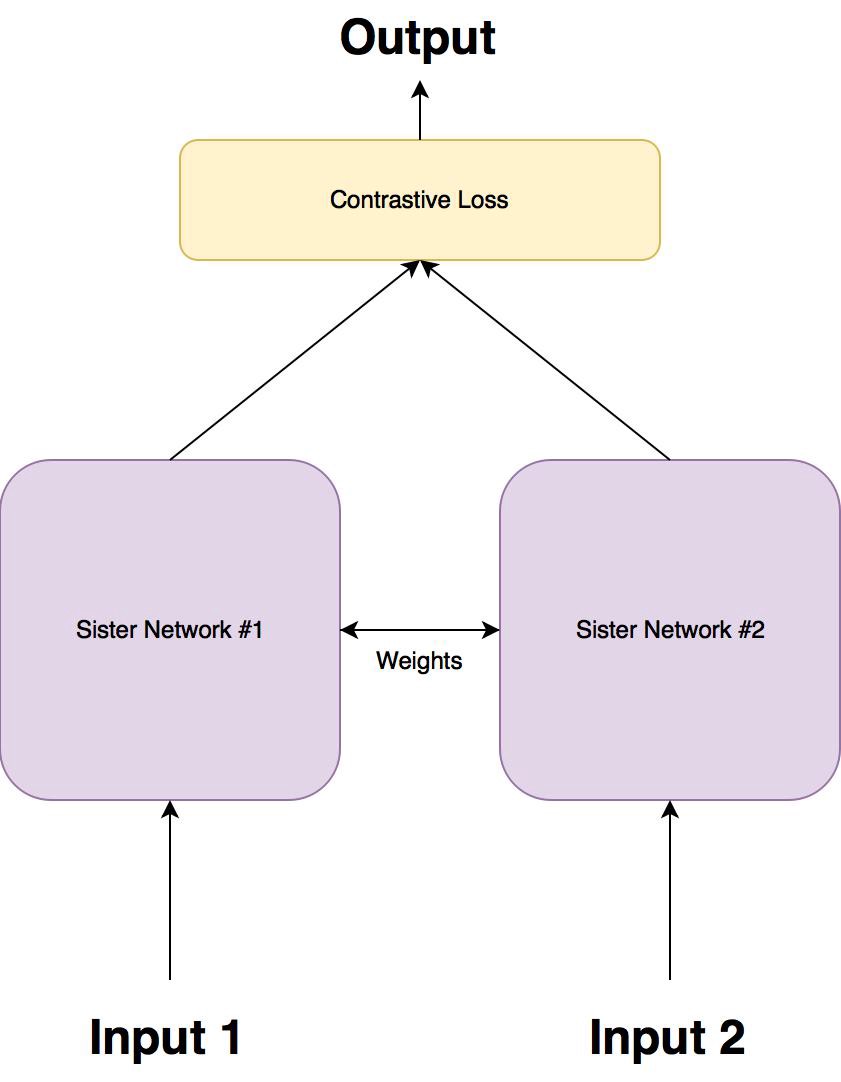

A Siamese networks consists of two identical neural networks, each taking one of the two input images. The last layers of the two networks are then fed to a contrastive loss function , which calculates the similarity between the two images. I have made an illustration to help explain this architecture.Figure 1.0

There are two sister networks, which are identical neural networks, with the exact same weights.

Each image in the image pair is fed to one of these networks.

The networks are optimised using a contrastive loss function(we will get to the exact function).

Contrastive Loss function

The objective of the siamese architecture is not to classify input images, but to differentiate between them. So, a classification loss function (such as cross entropy) would not be the best fit. Instead, this architecture is better suited to use a contrastive function. Intuitively, this function just evaluates how well the network is distinguishing a given pair of images.

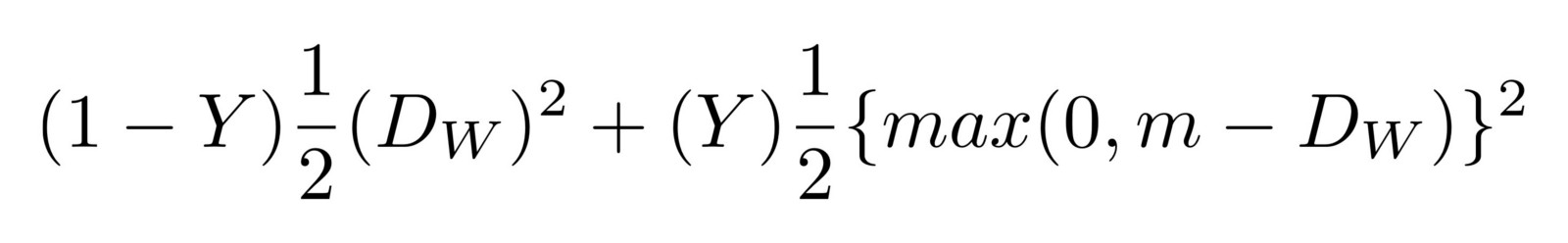

The contrastive loss function is given as follows:

where Dw is defined as the euclidean distance between the outputs of the sister siamese networks. Mathematically the euclidean distance is

where Gw is the output of one of the sister networks. X1 and X2 is the input data pair.

Y is either 1 or 0. If the inputs are from the same class , then the value of Y is 0 , otherwise Y is 1。 max() is a function denoting the bigger value between 0 and m-Dw.

m is a margin value which is greater than 0. Having a margin indicates that dissimilar pairs that are beyond this margin will not contribute to the loss. This makes sense, because you would only want to optimize the network based on pairs that are actually dissimilar , but the network thinks are fairly similar.

Architecture

he weights are constrained to be identical for both networks, we use one model and feed it two images in succession

Siamese

The contrastive loss in PyTorch looks like this:

Last updated